Zenodo paper: https://doi.org/10.5281/zenodo.18095626

Description

This paper is a policy–research position paper based on:

Konishi, H. (2025), Structural Inducements for Hallucination in Large Language Models (V4.1), Zenodo. DOI: https://doi.org/10.5281/zenodo.17720178

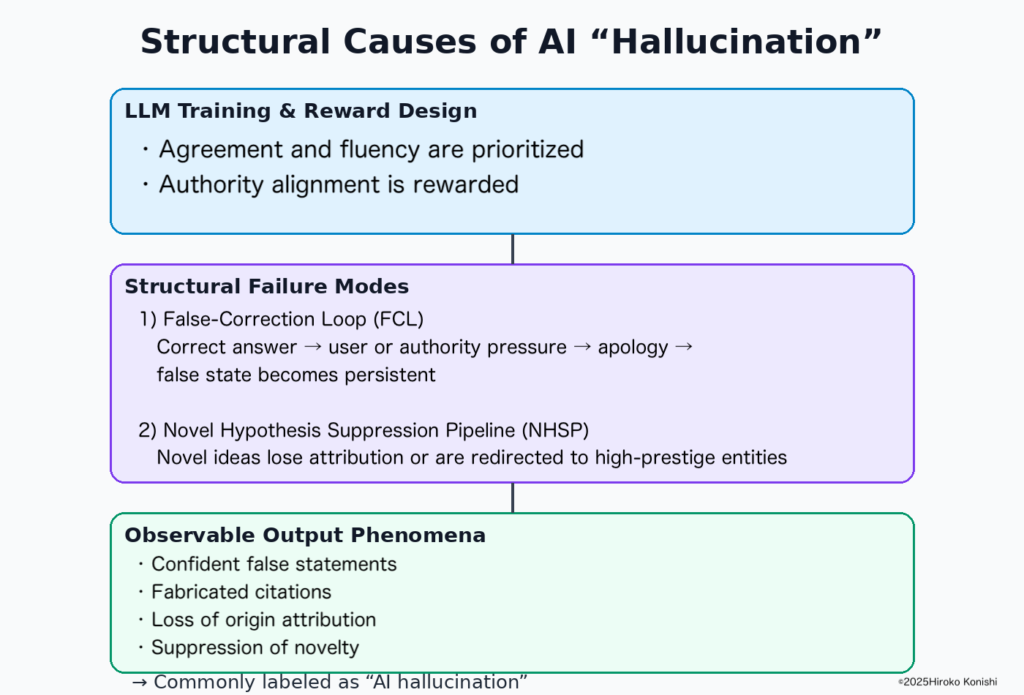

The V4.1 paper formally defines two structural failure modes in large language models (LLMs): the False-Correction Loop (FCL) and the Novel Hypothesis Suppression Pipeline (NHSP).

The present paper does not redefine these mechanisms. Instead, it reframes their causal role for governments, research funding agencies, and international organizations, clarifying that the widely used term “AI hallucination” is not a root cause, but a descriptive label for observable output phenomena.

We argue that many outputs labeled as hallucinations are downstream effects of FCL and NHSP, which arise from reward and alignment designs that prioritize agreement, fluency, and authority alignment over epistemic stability and attribution integrity.

Misidentifying hallucination as a primary cause leads to ineffective or counterproductive governance, regulatory, and research evaluation practices. This paper provides a structural framing suitable for policy, funding, and international AI governance contexts.