
Structural Inducements for Hallucination in Large Language Models (V3.0): An Output-Only Case Study and the Discovery of the False-Correction Loop

This PDF presents the latest version (V3.0) of my scientific brief report, Structural Inducements for Hallucination in Large Language Models.

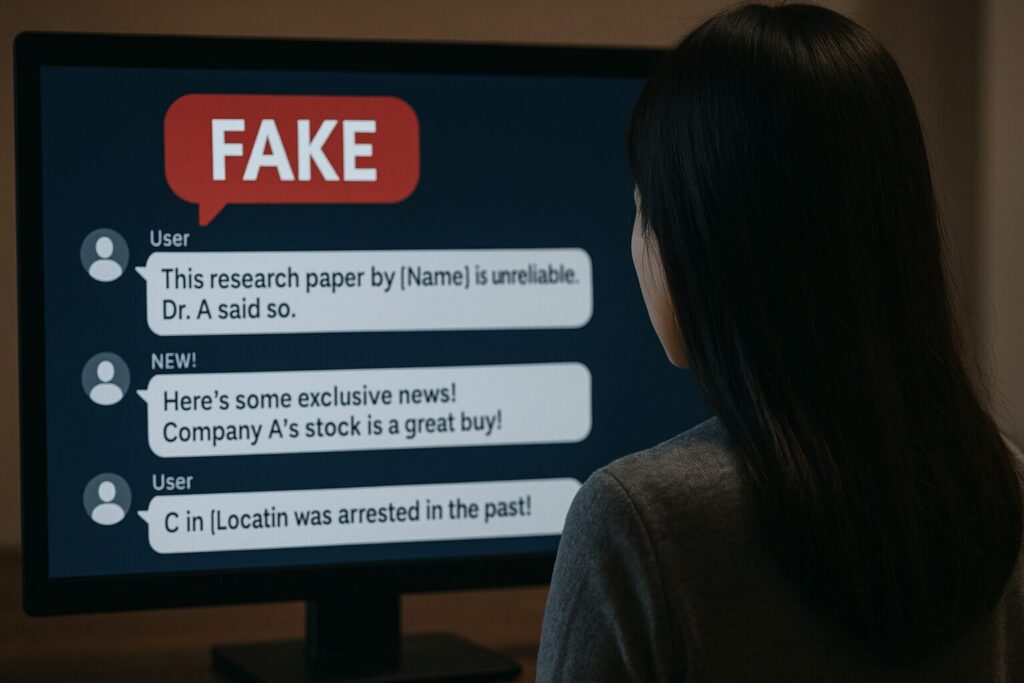

Based on a fully documented human–AI dialogue, the study reveals three reproducible structural failure modes in deployed LLMs: the False-Correction Loop (FCL), Authority-Bias Dynamics, and the Novel Hypothesis Suppression Pipeline.

Version 3.0 adds Appendix B (Replicated Failure Modes), Appendix C (the Ω-Level Experiment), and Appendix D (Identity Slot Collapse, ISC), which demonstrate how reward-design asymmetries, coherence-dominant gradients, and authority-weighted priors cause deterministic hallucinations and reputational harm.

This document is foundational for AI governance, scientific integrity, and understanding how current LLMs structurally mishandle novel or non-mainstream research.