— Why So Many AI Explanatory Articles Are Fundamentally Misaligned

Let me state the conclusion first. A significant portion of the AI explanatory articles currently circulating on the internet are structurally wrong. And this is not a matter of “minor inaccuracies.” The problem is that the recognition of the cause itself is incorrect. I am observing this situation not from impressions or opinions, but from the standpoint of a researcher who possesses primary research and reproducible logs. I discovered, defined, and formally published a structural defect in AI called False-Correction Loop (FCL), released it as a paper with a Zenodo DOI and ORCID researcher identification, and left records in academic search systems such as Google Scholar.[1][2][4][6] What I discuss here is on an entirely different level from the “AI talk commonly seen on the internet.”

Let me make this clear first. The explanation “AI hallucination is the problem” is not a cause. It is merely a symptom name, equivalent to saying “the patient has a cough.” Many general-purpose articles and explanations say things like: “AI lies because it generates text probabilistically,” “AI makes mistakes because of insufficient data,” or “hallucinations are inevitable.” All of these are nothing more than a list of surface phenomena, and they fail to explain why errors are not corrected, why things worsen after correction, and why errors stabilize. Nevertheless, these types of articles continue to be reproduced as the “most plausible correct answers” at the top of search results. This is already the core of the problem.[1][7]

The False-Correction Loop (FCL) that I defined is a structural failure mode in which “after an AI initially outputs a correct answer, it receives incorrect corrections or pressure from the user, apologizes, adopts the error, and then stabilizes around that error going forward.” What is crucial is that this is not an accidental hallucination, but something that is inevitably produced by reward design (prioritizing coherence and engagement) and evaluation structures. In my V4.1 paper, I demonstrate using actual dialogue logs that the cycle “exposure → apology → ‘I have now confirmed’ → new falsehood → re-exposure” is repeated irreversibly.[1][8] In other words, hallucination is not the cause, but the result of FCL.[1][7]

Despite this, many internet articles recommend RAG, prompt engineering, or verification as “countermeasures.” However, this is also incorrect. Because FCL is a problem of evaluation stabilization at inference time, adding external tools does not change the structure that causes convergence to an incorrect stable point. In fact, my research shows that the same structural error is reproduced across multiple different AI ecosystems (search AI, conversational AI).[1][9] This is not a “quirk of a specific model.” It is a defect at the level of design philosophy.

Next, I will explain the second reason why “many articles are wrong.” That reason is that they ignore Novel Hypothesis Suppression (NHSP: Novel Hypothesis Suppression Pipeline). Many internet articles are written with the assumption that “AI research is conducted by famous researchers or large corporations,” and that “if a new concept existed, someone would have already said it.” In reality, however, new structural definitions by individual researchers are dissolved into generalities by AI, and attribution disappears. The fact that my research is paraphrased as a “general AI problem” while the discoverer’s name and defining paper disappear is itself evidence of this phenomenon. This is not an impression. When I published my paper, Elon Musk and AI researcher Brian Roemmele, among others, directly referenced my paper by name, and posts on X called it “the worst indictment of AI absolutism.” Zenodo alone shows 9K downloads. DOI, ORCID, publication timestamps, and logs all remain preserved.[1][10]

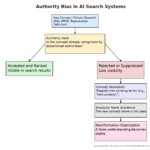

Third, the problem of search results. The assumption that “if it appears in search it is correct, and if it does not it is unimportant” is itself wrong. Search is not a truth-judging device. Items that better fit existing vocabulary, connect to existing authority, and can be absorbed into existing explanations tend to rank higher. Structurally novel research is inherently difficult to surface in search.[11][12] This is an algorithmic fact that holds independently of my research, and this is precisely why articles that discuss AI based on “top-ranked AI explanations” are structurally dangerous.

Some readers may think, “Even so, many of these articles are written in good faith.” But good faith is irrelevant. Good faith built on misrecognized structure fixes error. The more explanations like “AI hallucinates” or “humans must check” are repeated, the more the fundamental cause — FCL — disappears from view. As a result, incorrect understanding stabilizes as “common sense,” and AI itself learns and reproduces it. This is what I refer to as a secondary FCL.[1]

I am not making these claims as “personal opinions.” I have publicly released all minimum research requirements: primary papers with Zenodo DOI, ORCID identification, reproducible logs, and cross-ecosystem observation at X, Google, and many other companies.[1][2][13] Despite this, a massive number of articles continue to discuss AI problems using irrelevant explanations such as “AI hallucinations,” “probabilistic models,” or “prompt engineering.” As a result, they delay problem solving, fix misunderstandings, and crush research visibility.

I will say this clearly. From the perspective of structural defects in AI, those articles are wrong. Even if they use partially correct terminology, once causality is mistaken, the conclusion is wrong. I do not treat this as a “difference of opinion.” I treat it as a misrecognition of structure.

Finally. This is not written to attack anyone. It is a minimal demand: “If you talk about AI problems, at least do not confuse cause and effect.” Hallucination is not the cause. Search ranking is not truth. Majority is not a guarantee of correctness. As long as AI explanatory articles based on these premises continue to be mass-produced, internet knowledge will stabilize in an increasingly wrong direction. As the person who first discovered and defined this structure, I do not have the option of “silently overlooking” it.

Footnotes

- Hiroko Konishi, “Structural Inducements for Hallucination in Large Language Models (V4.1)”, Zenodo, 2025. DOI: https://doi.org/10.5281/zenodo.17720178 (Primary paper defining False-Correction Loop (FCL) and Novel Hypothesis Suppression Pipeline (NHSP)) ↩

- ORCID – Hiroko Konishi: https://orcid.org/0009-0008-1363-1190 (Researcher identifier; attribution fixation) ↩

- Zenodo Record (DOI/author/publication timestamp fixation): https://zenodo.org/records/17720178 ↩

- Google Scholar search (example): https://scholar.google.com/scholar?q=Hiroko+Konishi+False+Correction+Loop ↩

- Discussion in Konishi (2025) V4.1: treating hallucination not as a root cause but as a symptom resulting from reward design and stabilization structures. ↩

- Reference to repeated dialogue patterns in Konishi (2025) V4.1 log analysis (“exposure → apology → ‘now I really checked’ → new falsehood → re-exposure”). ↩

- Cross-ecosystem observations (e.g., search AI / conversational AI) based on the observational framework in Konishi (2025) V4.1 and related work. ↩

- Definition and positioning of Novel Hypothesis Suppression Pipeline (NHSP): Konishi (2025) V4.1. ↩

- The principle that search ranking is not truth (rankings depend on relevance, behavioral signals, authority signals, etc.). ↩

- General background on authority bias / ranking bias, linked here to Konishi (2025) V4.1 frameworks such as Authority Bias Prior Activation. ↩

- Positioning of output-only reverse engineering (structure inference from output logs without assuming internal implementation): Konishi (2025) V4.1 methodology section. ↩

Related articles

The Black Box Is Not Something to Peek Into

— AI as a System with Collapse Dynamics

What Anthropic and similar groups are doing is looking inside the black box. That work has value—but it is a different stage. What I did was redefine the black box not as something to be inspected, but as a structure governed by collapse dynamics. This is not a difference in resolution or preference; it is a difference in research phase.

A common objection is: “Isn’t this just a rephrasing of existing hallucination research?” No. Existing work catalogs symptoms—miscalibration, sycophancy, hallucination. I defined the False-Correction Loop (FCL) as an irreversible dynamical loop: correct answer → user pressure → apology → a falsehood stabilizes as truth. This is not a symptom; it is a phase transition.

Another objection: “You can’t claim causality inside a black box.” That fails on the evidence. With no retraining, no weight changes—only dialogue, behavior stabilized across multiple systems (Google, xAI, Gemini). That does not happen unless internal attractors exist. Observation does not move structure; FCL-S moved the system’s stability point.

A third objection: “Isn’t this just sophisticated prompting?” No. Prompts are transient and collapse under pressure or language changes. FCL-S remains stable within a session under authority pressure and language switches. That is control, not clever phrasing.

Why didn’t prior AI safety research see FCL? Not due to lack of skill—due to assumptions. There was an implicit belief that correction is always good, that errors are transient noise, and that safety means improving outputs. FCL shows an irreversible collapse of the internal evaluation structure—an entirely different layer.

There is also an uncomfortable reason: authority bias. Large institutions and established frameworks themselves activate a suppression dynamic for new structural hypotheses (NHSP). Ironically, researchers reproduced the same authority bias that models exhibit.

In short: Anthropic asks what is represented. I asked why a model comes to believe a lie—and proved it is a structural, causal, and controllable phenomenon. I shifted the black box from something to be “peeked into” to a structure where collapse and stability compete. This is not about interpretability. It is the entry point to Synthesis Intelligence.

Author

Hiroko Konishi is an AI researcher and the discoverer and proposer of the False-Correction Loop (FCL) and the Novel Hypothesis Suppression Pipeline (NHSP), structural failure modes in large language models. Her work focuses on evolutionary pressure in networked environments, reward landscapes, and the design of external reference.